Smart Security Camera

(with attendance on Google Sheets)

Summary

The aim of this project is to make a smart camera that can monitor your house, office, etc. and give you valuable data: keeping track of who is at the door and when each person arrived, taking photographs of unknown people, and opening the smart lock (electromagnet-based) only when a known person is at the door.

Hardware Requirements

-Raspberry Pi (any version will do; a 4B with 2GB was used for best results)

-Raspberry Pi CSI Camera

-Power Bank

-SD card (flashed with the latest Raspbian)

-Relay (for controlling the door lock)

Software Requirements

-pip (installed along with Python)

-Git

-Clone my GitHub repository (http://github.com/aabhassenapati/smart_security_camera) onto the Raspberry Pi, or download it as a ZIP file and unzip it there.

Bringing the Project into Real Life

There are two main parts to this project: face recognition using OpenCV, and sending the data onto Google Sheets. The most difficult task is integrating these two together.

To start building the project, we first need to set up the requirements for OpenCV face recognition, so open a terminal and run the following commands one by one:

- $ sudo apt-get install libhdf5-dev libhdf5-serial-dev libhdf5-100

- $ sudo apt-get install libqtgui4 libqtwebkit4 libqt4-test python3-pyqt5

- $ sudo apt-get install libatlas-base-dev

- $ sudo apt-get install libjasper-dev

- $ wget https://bootstrap.pypa.io/get-pip.py

- $ sudo python3 get-pip.py

- $ pip install virtualenv virtualenvwrapper

- $ mkvirtualenv cv -p python3

- $ workon cv

- $ pip install opencv-contrib-python

- $ pip install dlib

- $ pip install face_recognition

- $ pip install imutils
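Before moving on, it can help to confirm that the packages installed above are importable from inside the `cv` virtual environment. The script below is a small sketch, not part of the repository (save it under any name you like, e.g. a hypothetical `check_install.py`). It uses the standard library's `importlib.util.find_spec` to report which modules are missing; `cv2`, `dlib`, `face_recognition` and `imutils` are the import names of the pip packages above.

```python
import importlib.util

# Import names of the packages installed above (opencv-contrib-python -> cv2).
REQUIRED_MODULES = ["cv2", "dlib", "face_recognition", "imutils"]


def missing_modules(names=REQUIRED_MODULES):
    """Return the subset of `names` that cannot be imported in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are installed.")
```

Run it after `workon cv`; if a module is reported missing, the virtual environment is probably not active or the corresponding `pip install` failed.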

After that, we need to unzip the repository and change into its directory:

- $ workon cv

- $ cd smart_security_camera

- $ tree

Next, replace the dataset with your own (5 images per person is sufficient), then run encode_faces.py:

- $ python encode_faces.py --dataset dataset --encodings encodings.pickle --detection-method hog
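The repository's encode_faces.py is not reproduced here. As a rough sketch of what such an encoding step does, the code below assumes the common layout `dataset/<person_name>/<image>.jpg` (the folder name becomes the person's name) and a pickle holding `{"encodings": [...], "names": [...]}`; both are assumptions, and `encode_image` is a hypothetical callback standing in for the real face-encoding call.

```python
import os
import pickle

IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def list_dataset(dataset_dir):
    """Yield (person_name, image_path) for each image in dataset/<person_name>/."""
    for person in sorted(os.listdir(dataset_dir)):
        person_dir = os.path.join(dataset_dir, person)
        if not os.path.isdir(person_dir):
            continue  # skip stray files at the top level
        for filename in sorted(os.listdir(person_dir)):
            if os.path.splitext(filename)[1].lower() in IMAGE_EXTS:
                yield person, os.path.join(person_dir, filename)


def build_encodings(dataset_dir, encode_image):
    """encode_image(path) must return a list with one encoding per face found."""
    names, encodings = [], []
    for person, path in list_dataset(dataset_dir):
        for encoding in encode_image(path):
            names.append(person)
            encodings.append(encoding)
    return {"encodings": encodings, "names": names}


def save_encodings(data, out_path):
    """Serialize the encodings/names mapping, e.g. to encodings.pickle."""
    with open(out_path, "wb") as f:
        pickle.dump(data, f)
```

On the Pi, `encode_image` could wrap the face_recognition library: load the image with `face_recognition.load_image_file(path)`, find faces with `face_recognition.face_locations(image, model="hog")` (matching `--detection-method hog`), and return `face_recognition.face_encodings(image, boxes)`.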

The next step is to get a client_secret.json file for the Google Sheets API, which is used to upload data onto Google Sheets, and place it in the smart_security_camera directory.

Next, get the Spreadsheet ID and Sheet ID of the Google Sheets worksheet where you want the data, and replace them in the code in the pi_face_recognition.py and sheet.py files.
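Both IDs can be read off the sheet's URL, which has the form `https://docs.google.com/spreadsheets/d/<spreadsheetId>/edit#gid=<sheetId>`. The sketch below uses hypothetical helper names and is not the code in the repository. It extracts the two IDs and builds a Sheets API v4 `appendCells` request, which is one way to use both IDs when appending rows. `make_service` assumes google-auth-oauthlib and google-api-python-client are installed and that a browser-based OAuth consent from `client_secret.json` is acceptable; those imports are done lazily so the pure helpers run anywhere.

```python
import re

SCOPES = ["https://www.googleapis.com/auth/spreadsheets"]


def parse_sheet_url(url):
    """Return (spreadsheet_id, sheet_id) from a Google Sheets URL; sheet_id may be None."""
    spreadsheet = re.search(r"/spreadsheets/d/([a-zA-Z0-9_-]+)", url)
    if spreadsheet is None:
        raise ValueError("not a Google Sheets URL: " + url)
    sheet = re.search(r"[#&?]gid=([0-9]+)", url)
    sheet_id = int(sheet.group(1)) if sheet else None
    return spreadsheet.group(1), sheet_id


def append_cells_request(sheet_id, rows):
    """Build a spreadsheets.batchUpdate body that appends `rows` to one sheet."""
    return {
        "requests": [{
            "appendCells": {
                "sheetId": sheet_id,
                "rows": [
                    {"values": [{"userEnteredValue": {"stringValue": str(v)}} for v in row]}
                    for row in rows
                ],
                "fields": "userEnteredValue",
            }
        }]
    }


def make_service(secrets_path="client_secret.json"):
    """Authorize once with the OAuth client file and return a Sheets API client."""
    # Imported lazily: these need google-auth-oauthlib and google-api-python-client.
    from google_auth_oauthlib.flow import InstalledAppFlow
    from googleapiclient.discovery import build

    flow = InstalledAppFlow.from_client_secrets_file(secrets_path, SCOPES)
    creds = flow.run_local_server(port=0)  # opens a browser for consent
    return build("sheets", "v4", credentials=creds)


def upload_rows(service, spreadsheet_id, sheet_id, rows):
    """Append rows to the sheet identified by spreadsheet_id and sheet_id."""
    body = append_cells_request(sheet_id, rows)
    return service.spreadsheets().batchUpdate(
        spreadsheetId=spreadsheet_id, body=body
    ).execute()
```

The service is built once and reused for every upload; a long-running script would normally also save the credentials to a token file so the consent step is not repeated on every start.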

Next, connect the relay's 5V (VCC) and GND pins to a 5V pin and a GND pin of the Raspberry Pi, connect the relay's signal pin to GPIO pin 18, and wire the relay into your electromagnetic lock's circuit as a switch.
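A minimal sketch of driving the relay from Python with the RPi.GPIO library, assuming "GPIO pin 18" means BCM numbering and that the relay module is active-high (many modules are active-low, in which case swap HIGH and LOW). The `setup_relay`/`unlock_for` helpers and the unlock duration are illustrative, not taken from the repository. When RPi.GPIO is not installed, a small recording stand-in is used so the logic can be tried off the Pi.

```python
import time

try:
    import RPi.GPIO as GPIO  # available on Raspberry Pi OS
except ImportError:
    class _RecordingGPIO:
        """Off-Pi stand-in that records calls instead of touching hardware."""
        BCM, OUT, HIGH, LOW = "BCM", "OUT", 1, 0

        def __init__(self):
            self.log = []

        def setmode(self, mode):
            self.log.append(("setmode", mode))

        def setup(self, pin, mode):
            self.log.append(("setup", pin, mode))

        def output(self, pin, value):
            self.log.append(("output", pin, value))

        def cleanup(self):
            self.log.append(("cleanup",))

    GPIO = _RecordingGPIO()

RELAY_PIN = 18  # assumed BCM numbering for "GPIO pin 18"


def setup_relay(pin=RELAY_PIN):
    """Configure the relay pin as an output and keep the relay off."""
    GPIO.setmode(GPIO.BCM)
    GPIO.setup(pin, GPIO.OUT)
    GPIO.output(pin, GPIO.LOW)


def unlock_for(seconds, pin=RELAY_PIN):
    """Switch the relay on (assumed active-high) for `seconds`, then off again."""
    GPIO.output(pin, GPIO.HIGH)
    time.sleep(seconds)
    GPIO.output(pin, GPIO.LOW)
```

Call `setup_relay()` once at start-up, `unlock_for(5)` when a known face is recognised, and `GPIO.cleanup()` on exit.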

Now you are ready with all the required installations, and you need to run both Python files to get the smart security camera working. In a new terminal, type the following commands, then run sheet.py in a second terminal:

- $ workon cv

- $ cd smart_security_camera

- $ python pi_face_recognition.py --cascade haarcascade_frontalface_default.xml --encodings encodings.pickle

- $ workon cv

- $ cd smart_security_camera

- $ python sheet.py

Working Video Demonstration

(Please bear with the poor quality of the video; I had to record it with my phone as I don't have a camera.)

Code and the Basic Concept of How It Works

(Earlier, the code pasted below lost its tab indentation. It is now embedded using a Gist, which has the correct indentation, and the code on GitHub is also properly indented.)


The Python script uploading data to Google Sheets

The relay turns on when a known person is detected

Data on Google Sheets

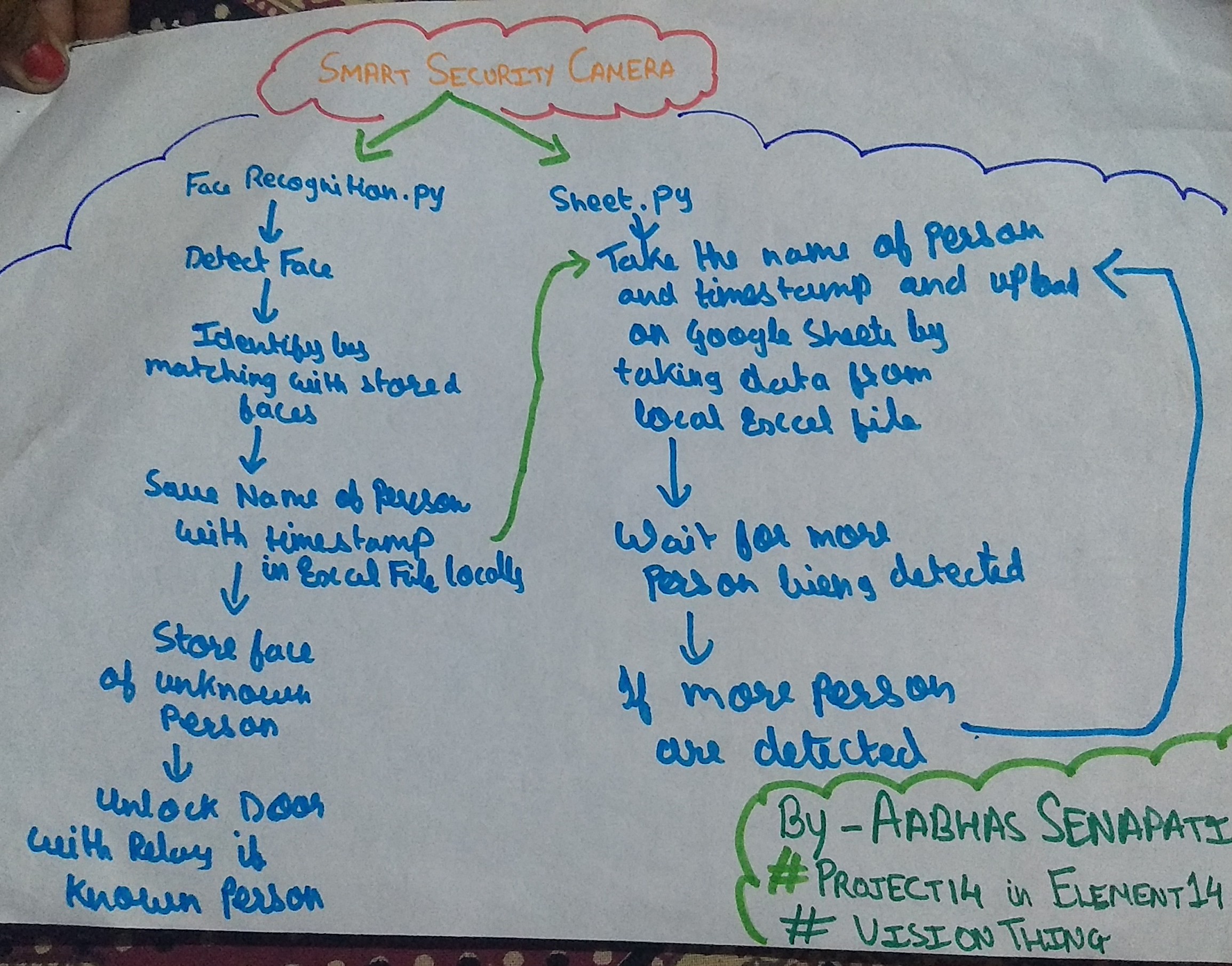

The two essential parts of the project are the pi_face_recognition.py and sheet.py Python files. Broadly, pi_face_recognition.py performs the face recognition and stores the results locally, while sheet.py takes this data and uploads it to Google Sheets.

Working of pi_face_recognition.py:-

The first task is to load the face encodings and determine whether there is any face in the frame. Next, it checks each detected face to identify whose face it is, or whether it is an unknown face, and draws a bounding box around it. If an unknown face is detected, it saves that frame, numbering the unknown faces serially. Finally, it stores this data locally in an Excel file.
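The identification step can be illustrated without a camera. The sketch below assumes encodings are plain numeric vectors (face_recognition produces 128-dimensional ones) and follows a common approach: a known encoding "matches" when its Euclidean distance to the new face is at most 0.6 (face_recognition's default tolerance for `compare_faces`), and the name with the most matching encodings wins. The `identify` and `next_unknown_filename` names and the file-name pattern are hypothetical, not the repository's.

```python
import math
from collections import Counter
from itertools import count

TOLERANCE = 0.6  # face_recognition's default tolerance for compare_faces


def identify(known_encodings, known_names, encoding, tolerance=TOLERANCE):
    """Return the name with the most matching known encodings, or "Unknown"."""
    votes = Counter(
        name
        for known, name in zip(known_encodings, known_names)
        if math.dist(known, encoding) <= tolerance
    )
    if not votes:
        return "Unknown"
    return votes.most_common(1)[0][0]


_unknown_ids = count(1)


def next_unknown_filename():
    """Serially numbered file name for a frame containing an unknown face."""
    return f"unknown_{next(_unknown_ids)}.jpg"
```

For example, with two close encodings labelled "alice" and one distant "bob", a face near the "alice" pair gets two votes and is labelled "alice"; a face far from every known encoding is labelled "Unknown" and its frame would be saved as `unknown_1.jpg`, then `unknown_2.jpg`, and so on.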

Working of sheet.py:-

This script takes the data from the locally saved file and uploads it to Google Sheets, recording who was at the door and at what date and time.
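Because sheet.py runs separately from the camera loop, it has to remember how many rows it has already uploaded and wait for new ones rather than stopping at the end of the file. A minimal polling sketch, assuming the local log is a CSV file (a simpler stand-in for the project's Excel file) and that `upload_row` is a hypothetical callback that sends one row to Google Sheets:

```python
import csv
import time


def read_rows(path):
    """Return all rows of the local log, or [] if it does not exist yet."""
    try:
        with open(path, newline="") as f:
            return list(csv.reader(f))
    except FileNotFoundError:
        return []


def upload_new_rows(path, uploaded_count, upload_row):
    """Upload rows after the first `uploaded_count`; return the updated count."""
    rows = read_rows(path)
    for row in rows[uploaded_count:]:
        upload_row(row)
        uploaded_count += 1  # count only rows whose upload call returned
    return uploaded_count


def run_forever(path, upload_row, poll_seconds=2.0):
    """Keep polling the log; when no new rows exist, just wait and try again."""
    uploaded = 0
    while True:
        uploaded = upload_new_rows(path, uploaded, upload_row)
        time.sleep(poll_seconds)
```

Keeping the count in the uploader means each row is sent once, and a slow network only delays uploads instead of slowing down the camera loop.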

Problems and errors faced during coding

-The first problem I faced was installing the libraries. I had to redo the installation many times, and it only succeeded once I installed the libraries inside a virtual environment.

-The next problem came after face recognition with Python was working: when I tried to use the Google Sheets API to upload the data, there was an error saying it was due to improper authentication. I found that the sheet ID had to be changed and a client_secret.json file had to be obtained.

-Then I was able to send the data to Google Sheets, but the frame rate was very low because it depended on the internet connection: the script only moved on to the next frame after the previous frame's data had finished uploading. So I decided to store the data locally and run another script to upload it to Google Sheets.

-The next problem was storing the data locally in an Excel file: it was not possible to edit a file once it had been saved. So I learned and used a method in which two files with the same data are created, and new data is stored by copying the data of one file and adding the new data to it; this process then repeats.

-The final error was that the code terminated after some time when no person was detected, because it reached the end of the file while parsing. To fix this, I took the number of rows from the face recognition script and used it as a condition to wait until more rows are added. (Earlier I thought the termination could be solved by running the script periodically with crontab, but that was not a good approach as it caused data to be uploaded repeatedly.)
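The copy-then-write workaround for the Excel file can be sketched with a CSV file standing in for the Excel file. For a plain CSV you could simply open the file in append mode; the version below instead mirrors the approach described above: copy the existing rows into a new temporary file, add the new row, then swap the new file in with `os.replace`. On the Pi (a POSIX system) that swap is atomic when both files are on the same filesystem, so a reader such as the upload script never sees a half-written file. The function name is hypothetical; it returns the total row count, which the upload side can compare against to decide whether to wait.

```python
import csv
import os
import tempfile


def append_row_via_copy(path, row):
    """Copy existing rows into a new file, add `row`, then swap it into place."""
    existing = []
    if os.path.exists(path):
        with open(path, newline="") as f:
            existing = list(csv.reader(f))
    # Create the temporary file next to the target so os.replace stays atomic.
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerows(existing)
        writer.writerow(row)
    os.replace(tmp_path, path)
    return len(existing) + 1
```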

I learned a lot about face recognition with OpenCV, using Google APIs, and working with Excel files in Python while making this project. Overall, building and troubleshooting it was a great learning experience.


Any comments, improvements, and queries are welcome in the comments below.

I would also like to thank danzima for the Raspberry Pi 4 and tariq.ahmad for the BeagleBone AI.
